Deluxe: Sprint 3

Friday, May 20, 2022

Another two weeks, another sprint report. This time round I learned a whole lot about interacting with the Physics server via GDScript, and got some very cool AI stuff implemented. Also managed a little bit of cleanup, making the creature scenes clearer.

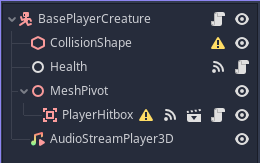

Consolidate Hitboxes and Hurtboxes

My initial creature scene used two colliders for damage: one for causing damage to another collider, and one for taking damage. The more I worked with it the more redundant it seemed, so I decided to consolidate them into a single collider.

This was so easy to do. Thank you Scene inheritance, Godot. All I had to do was update the Physics layers checked for the Enemy/Player Hitboxes and add an `Impact_Damage` exported variable to replace the Hurtbox `Damage` export. Removed the Hurtboxes from baseline creatures and deleted the baseline Hurtbox scenes/script, and everything just worked.

Teleportation

Teleportation in VR is a solved problem in most engines, and Godot is no exception. However, I like trying to implement my own version of things occasionally, when existing implementations are a bit heavy and it's not a terribly complex thing to implement on your own. So I spun up a TeleportationManager that handles calculating when and where a player can teleport around the scene. In doing so I actually learned about a lot of features that I'll be utilizing in the future.

When the user wants to teleport, the manager runs a quick and dirty simulation of throwing an object out from the controller. The manager has parameters for the distance between each step of the throw calculation and a gravity value. On each step, starting at the controller's position and using the -Basis.Z of the controller's transform, I set a start and end point that make up a step in the arc simulation. Using the `PhysicsDirectSpaceState`, I call `intersect_ray()` and check for any collisions in this step. If there aren't any, I set the next start point at the current end point, then calculate the new end point by adding the normalized vector of the difference between the previous start and end positions, the gravity multiplied by the current iteration step, and the length of the step defined by the manager. If there is a collision, I use the normal to verify it's a flat surface the user can teleport to, and then set the potential teleport position to the intersection position.
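For anyone who wants to poke at the idea outside the engine, here is a minimal, engine-agnostic sketch of that stepping loop in Python rather than GDScript. It is one reading of the description above, not the project's actual code: `simulate_arc`, `flat_floor_ray`, the parameter defaults, and the `min_up_dot` flatness threshold are all hypothetical, and the `ray_query` callback is only a stand-in for a call to `intersect_ray()`.

```python
import math
from typing import Callable, List, Optional, Tuple

Vec3 = Tuple[float, float, float]
Hit = Tuple[Vec3, Vec3]  # (hit position, surface normal)
# Hypothetical stand-in for intersect_ray(): returns a Hit, or None on a miss.
RayQuery = Callable[[Vec3, Vec3], Optional[Hit]]


def add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def scale(a: Vec3, s: float) -> Vec3:
    return (a[0] * s, a[1] * s, a[2] * s)


def normalize(a: Vec3) -> Vec3:
    length = math.sqrt(a[0] ** 2 + a[1] ** 2 + a[2] ** 2)
    return a if length == 0.0 else scale(a, 1.0 / length)


def simulate_arc(
    origin: Vec3,
    forward: Vec3,
    ray_query: RayQuery,
    step_length: float = 0.5,   # assumed default
    gravity: float = 0.05,      # assumed default
    max_steps: int = 64,        # assumed safety cap
    min_up_dot: float = 0.9,    # assumed "flat enough" threshold
) -> Tuple[List[Vec3], Optional[Vec3]]:
    """Return (cached arc points, teleport position or None)."""
    points = [origin]
    start = origin
    direction = normalize(forward)
    for step in range(1, max_steps + 1):
        end = add(start, scale(direction, step_length))
        hit = ray_query(start, end)
        if hit is not None:
            position, normal = hit
            points.append(position)
            # Only surfaces whose normal points mostly upward count as floor.
            is_flat = normalize(normal)[1] >= min_up_dot
            return points, position if is_flat else None
        points.append(end)
        # Bend the throw downward a little more on every step.
        direction = normalize(add(direction, (0.0, -gravity * step, 0.0)))
        start = end
    return points, None


def flat_floor_ray(start: Vec3, end: Vec3) -> Optional[Hit]:
    """Toy ray query: an infinite floor at y = 0 with an upward normal."""
    if start[1] > 0.0 >= end[1]:
        t = start[1] / (start[1] - end[1])
        return add(start, scale(sub(end, start), t)), (0.0, 1.0, 0.0)
    return None


points, target = simulate_arc((0.0, 1.5, 0.0), (0.0, 0.0, -1.0), flat_floor_ray)
```

The returned `points` list plays the role of the cached arc points, and `target` is either a valid teleport position or `None` when the arc hits a wall or runs out of steps.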

Using the `PhysicsDirectSpaceState` is so much cleaner to me than using a RayCast node. It's similar to the way raycasts work programmatically in Unity, and much more flexible than the RayCast node IMO. There are also options for checking intersection using shapes, which I imagine I'll be able to use for things like spherecasting.

Rendering the arc utilizes the `MultiMeshInstance` node. I'd never tried it before, and it was shockingly easy to spin up. I set the MultiMesh up with a mesh in the editor, then programmatically set the number of instances and their positions based on the cached points of the arc created during the throw simulation described above. I initially meant to use the cached data to dynamically create an arc mesh, but I kinda like the billboarded sprite look and might keep it.

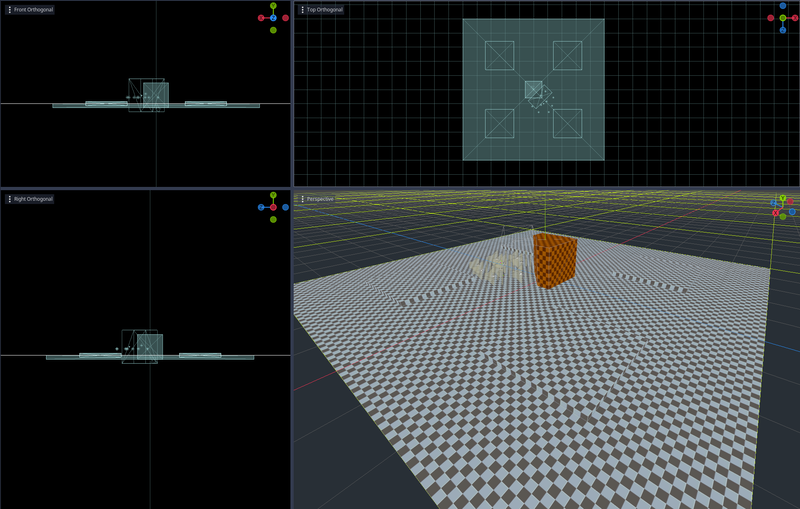

Graybox first level

Kinda wild that I've already hit a point where I need to start prototyping play spaces. The primary goal of this was to have something that a player could teleport around and enemy AI could fly about, searching for the player ship or another target of interest. CSGs were a huge help here, letting me quickly cobble together some basic geometry that's slightly more interesting than the basic meshes while still being easier and faster to hammer out than creating a small level in Blender.

Navigating Flying NPC prototype

Most of this sprint was focused on figuring out how to get the enemy AI to actually work. The initial implementation worked on a theoretical level, but was way too computationally expensive (and also overkill, as I later worked out). The followup was simpler, extensible, and what currently exists in the project.

At first I thought it'd make sense for the enemies to have a good general awareness of their immediate surrounding geometry. Not just the player, but the level as well. I started digging into raycasting as a way for the AI to "look" in various directions and navigate towards a given target. Using the `PhysicsDirectSpaceState` (accessible via any Spatial node), I set one raycast to see if there were any objects blocking the way to the AI's target and a subsequent pair of nested loops that would scan the surrounding space with calls to `intersect_ray()`. If there wasn't anything between the AI and the target, it set its next destination to the target's position. Otherwise, the looped raycasts would search a limited distance around itself, and the point nearest the target with no collisions would be the AI's next position. Once the AI was near the position it selected, the process would repeat.
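To make the cost of that first approach concrete, here is a rough Python sketch of the scan, written engine-agnostically rather than in GDScript. Everything in it is an assumption for illustration: `pick_next_position`, the yaw/pitch sampling pattern, the step counts, and the `is_blocked` callback (a stand-in for checking whether `intersect_ray()` returned a hit) are hypothetical, not the project's code.

```python
import math
from typing import Callable, Optional, Tuple

Vec3 = Tuple[float, float, float]
# Hypothetical stand-in for "intersect_ray() returned a hit between a and b".
Blocked = Callable[[Vec3, Vec3], bool]


def pick_next_position(
    position: Vec3,
    target: Vec3,
    is_blocked: Blocked,
    scan_distance: float = 2.0,  # assumed search radius
    yaw_steps: int = 8,          # assumed horizontal resolution
    pitch_steps: int = 5,        # assumed vertical resolution
) -> Optional[Vec3]:
    """Return the next point to fly to, or None if every direction is blocked."""
    # One ray straight at the target: if it is clear, just go there.
    if not is_blocked(position, target):
        return target

    best: Optional[Vec3] = None
    best_distance = math.inf
    # Nested loops sweep pitch (-60..+60 degrees) and yaw (full circle).
    for p in range(pitch_steps):
        pitch = math.radians(-60.0 + 120.0 * p / max(pitch_steps - 1, 1))
        for y in range(yaw_steps):
            yaw = 2.0 * math.pi * y / yaw_steps
            direction = (
                math.cos(pitch) * math.sin(yaw),
                math.sin(pitch),
                math.cos(pitch) * math.cos(yaw),
            )
            candidate = (
                position[0] + direction[0] * scan_distance,
                position[1] + direction[1] * scan_distance,
                position[2] + direction[2] * scan_distance,
            )
            if is_blocked(position, candidate):
                continue
            # Keep the unobstructed point that ends up closest to the target.
            distance = math.dist(candidate, target)
            if distance < best_distance:
                best, best_distance = candidate, distance
    return best
```

With these assumed defaults a single replan can cost up to `1 + yaw_steps * pitch_steps` ray queries per enemy, so the total grows quickly with scan resolution and enemy count.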

This worked in principle, but the high number of raycasts crushed the framerate (I think, more on that later). What probably would've passed as fine on desktop for a prototype was nauseating in VR, clocking in around 45 FPS on average.

So I started thinking about what I actually wanted this AI to do. After some whiteboarding, notebook doodling, and playing a bit of Descent, I came up with a state machine approach. Each enemy creature got an `AIBrain` node, which contains (among other things) a state the brain is currently in. Each AI tick (currently Physics ticks, because this stuff is fast) an `AIService` evaluates what the brain knows and makes decisions on whether or not to change state, and what state to change to. The brains have a bunch of knobs for twiddling, like vision distance, field of view (FOV), boredom, pain tolerance, and more, that should allow for some distinct behaviors. Additionally, it's up to the creature itself to determine just how it should move or act based on the state of the brain and what data it contains. Thanks to class inheritance and signals, I can create new creatures that all move, look, and shoot differently with (hopefully) minimal fuss.

I captured some video of the initial prototype of this in action, with a number of ships with the same brain parameters. They all begin in the "IDLE" state, have a FOV of 90 degrees and can see 1 meter, and get bored after a couple seconds. If the player ship comes into their view distance, in their field of view, AND isn't blocked by some other geometry, the enemy enters "CHASING" mode, moving very slowly while turning towards where it saw the player so it's eventually facing dead-on. If it loses sight of the player ship, whether because the player moves out of its FOV or view distance or just ducks behind a big blocking object, the enemy enters "SEARCHING" mode. In this instance, that means moving in the direction it last saw the player ship. While "SEARCHING", its 'boredom' level increases every second. If the boredom level hits or passes the brain's 'attention span' parameter, the enemy goes back to the "IDLE" state. However, if it sees the player before it gets too bored, it goes back to "CHASING".
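The transitions described above are small enough to sketch in a few lines. The following is a Python approximation, not the project's GDScript: the `AIBrain` fields, `can_see`, and `tick` are hypothetical names, `is_blocked` stands in for a raycast against level geometry, and treating the 90 degree FOV as the full cone angle (45 degrees either side of forward) is an assumption.

```python
import math
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Optional, Tuple

Vec3 = Tuple[float, float, float]


class State(Enum):
    IDLE = "IDLE"
    CHASING = "CHASING"
    SEARCHING = "SEARCHING"


@dataclass
class AIBrain:
    vision_distance: float = 1.0  # meters, as in the prototype video
    fov_degrees: float = 90.0     # assumed to be the full cone angle
    attention_span: float = 2.0   # seconds of boredom before giving up
    state: State = State.IDLE
    boredom: float = 0.0
    last_seen: Optional[Vec3] = None


def can_see(brain: AIBrain, eye: Vec3, forward: Vec3, target: Vec3,
            is_blocked: Callable[[Vec3, Vec3], bool]) -> bool:
    """Distance check, then FOV check, then an occlusion check."""
    to_target = (target[0] - eye[0], target[1] - eye[1], target[2] - eye[2])
    distance = math.sqrt(sum(c * c for c in to_target))
    if distance > brain.vision_distance:
        return False
    forward_length = math.sqrt(sum(c * c for c in forward))
    if distance > 0.0 and forward_length > 0.0:
        dot = sum(f * t for f, t in zip(forward, to_target))
        cos_angle = dot / (forward_length * distance)
        if cos_angle < math.cos(math.radians(brain.fov_degrees / 2.0)):
            return False
    # is_blocked is a hypothetical stand-in for a raycast hitting geometry.
    return not is_blocked(eye, target)


def tick(brain: AIBrain, sees_player: bool,
         player_position: Optional[Vec3], delta: float) -> State:
    """Advance the brain by one AI tick of `delta` seconds."""
    if sees_player:
        # Seeing the player always (re)starts the chase and clears boredom.
        brain.state = State.CHASING
        brain.boredom = 0.0
        brain.last_seen = player_position
    elif brain.state == State.CHASING:
        # Lost sight: head toward the last known position.
        brain.state = State.SEARCHING
    elif brain.state == State.SEARCHING:
        brain.boredom += delta
        if brain.boredom >= brain.attention_span:
            brain.state = State.IDLE
            brain.boredom = 0.0
    return brain.state
```

Keeping the decision logic separate from movement mirrors the split described earlier: the brain only tracks state and data like `last_seen`, and each creature decides how to move based on it.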

There's additional logic for moving into weapons range to enter an "ATTACKING" state, and an "EVADING" state for moving out of the way of player projectiles or other threats, but that's not fully wired up yet and will tie into Sprint 4.

What's Next

Enemy AI attack and evasion

While the states are there, more testing is needed for attacking. Evasion also needs the logic actually implemented on the enemy, so it can do something more than just recognize it's being attacked.

Grip Swap

A holdover from this last sprint, it just got pushed as I felt the AI work was a higher priority.

Snap Turn

Another VR standard, the ability to snap turn the player in increments is super handy. I've already found myself missing it while testing AI, so this'll be a relatively high priority this sprint.

Pickups/Player Weapon Changing

Items that the player can collide with to increase health or upgrade their weapon are a big one. This will probably get split into a few different tickets, one being a rough draft of how the weapon upgrade system will work.

Wrapup

As always, things take longer than expected. Still, progress on this has been solid IMO. Thanks for following along so far. Be sure to follow me over at mastodon.gamedev.place and diode.zone for bite-sized content updates and videos as they get posted!